Summary

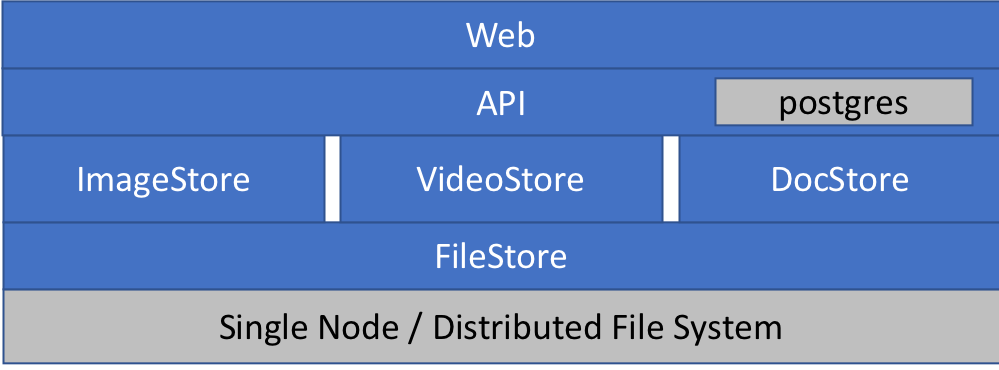

- Problem: Multi-model workloads are the norm (A/B tests, customer fine-tunes, safety variants, multi-stage pipelines). GPU memory use scales linearly with model count, and VRAM is the limiting resource.

- Solution: Tensor deduplication automatically identifies and shares bit-identical weight tensors across models, requiring no checkpoint modifications.

- Results: Across diffusion and LLM workloads, real-world savings range from 3% to 32%. DeepFloyd IF stages share 18.87 GB (a 32% reduction). The synthetic upper bound is 50%.

- Overhead: Hashing adds <1% to model load time. Zero runtime overhead since the forward pass is unchanged.

- Compatibility: Works with Hugging Face safetensors, GGUF, and Diffusers pipelines. No changes to training or checkpoints required.
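
To make the core idea concrete, here is a minimal sketch of content-hash deduplication at load time. It is an illustration under stated assumptions, not the actual implementation: `Tensor`, `TensorPool`, `tensor_key`, and `load_model` are hypothetical names, tensors are modeled as raw byte buffers rather than GPU allocations, and SHA-256 is an assumed choice of hash (the summary above does not say which one is used).

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Tensor:
    """Hypothetical stand-in for a weight tensor: metadata plus raw bytes."""
    dtype: str
    shape: tuple
    data: bytes


def tensor_key(t: Tensor) -> str:
    """Hash dtype, shape, and contents so only bit-identical tensors match."""
    h = hashlib.sha256()
    h.update(t.dtype.encode())
    h.update(repr(t.shape).encode())
    h.update(t.data)
    return h.hexdigest()


class TensorPool:
    """Keeps one resident copy per unique tensor across all loaded models."""

    def __init__(self):
        self._store = {}
        self.bytes_requested = 0  # what naive per-model loading would use
        self.bytes_resident = 0   # what is actually kept after sharing

    def intern(self, t: Tensor) -> Tensor:
        self.bytes_requested += len(t.data)
        key = tensor_key(t)
        existing = self._store.get(key)
        if existing is None:
            # First time this content is seen: keep it.
            self._store[key] = t
            self.bytes_resident += len(t.data)
            return t
        # Duplicate content: hand back the copy that is already resident.
        # (A cautious version could also compare bytes to rule out collisions.)
        return existing


def load_model(pool: TensorPool, state_dict: dict) -> dict:
    """Route every tensor through the pool; the checkpoint itself is untouched."""
    return {name: pool.intern(t) for name, t in state_dict.items()}


# Two toy "models": a base and a fine-tune that share an encoder weight.
pool = TensorPool()
base = load_model(pool, {
    "encoder.weight": Tensor("uint8", (16,), bytes(range(16))),
    "head.weight": Tensor("uint8", (4,), b"\x01\x02\x03\x04"),
})
tuned = load_model(pool, {
    "encoder.weight": Tensor("uint8", (16,), bytes(range(16))),
    "head.weight": Tensor("uint8", (4,), b"\x09\x09\x09\x09"),
})
saved = pool.bytes_requested - pool.bytes_resident
print(f"saved {saved} of {pool.bytes_requested} bytes")
```

Because both models end up holding a reference to the same stored tensor, nothing about the forward pass has to change; the only added work is hashing each tensor once while loading.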

Multi-Model Memory Bloat

Modern inference deployments rarely serve a single model. Production systems routinely load:
